A Primer on Systems Thinking

In our increasingly interconnected world, the ability to see the big picture is more than an advantage; it's a necessity. Thinking in Systems: A Primer by Donella Meadows is an essential read for anyone who wants to understand the complex webs that shape our environment, societies, and economies. This summary distills the essence of Meadows' insights, offering a clear and accessible exploration of how systems work, how they fail, and how they can be improved. Whether you're a student, a professional, or simply curious, the book, and this summary of it, will equip you with tools to think more broadly and act more effectively. By grasping Meadows' principles, you'll be better prepared to tackle challenges large and small and to appreciate the intricate systems that influence our daily lives and our future.

What Is a System?

A system is made up of three parts:

- Elements: These are the parts or components within the system.

- Interconnections: These are the ways in which the elements relate and link to each other.

- Purpose or Function: This is what the system is supposed to do or achieve.

Put together, a system is an interconnected set of elements organized in a way that achieves its purpose or function.

Systems are everywhere in the world.

- For example, a soccer team is made up of players, each with their own role that connects with the others. But the team is more than just players; it includes coaches, staff, and fans too.

- A business is a system where people, machines, and information come together to meet the company's objectives. This business is also a part of the bigger system of the economy.

Stocks and Flows

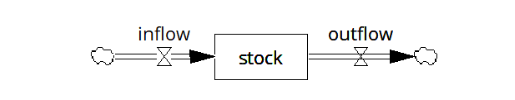

Stocks and flows are the basic building blocks of any system.

Think of a stock as a visible, countable, or measurable element in a system, like a pile of goods or a quantity waiting to be used.

Flows are the processes that change stocks over time. Incoming flows add to the stock, while outgoing flows reduce it.

Imagine a bathtub to understand this concept:

- The stock is how much water the tub holds.

- The inflow is the water running from the tap, increasing the stock.

- The outflow is the water going down the drain, decreasing the stock.

The diagram at the top of this section shows this bathtub system.

This idea applies to other systems too:

- In the case of fossil fuels, the stock is the underground oil. Drilling reduces this stock, while natural formation adds to it, though so slowly (over millions of years) that this inflow is negligible on a human timescale.

- The world’s population is a stock too. It grows with every birth and decreases with each death.
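To make the bathtub accounting concrete, here is a minimal Python sketch that steps a single stock forward in time. It assumes constant flows, a one-minute time step, and made-up numbers; the function name `simulate_bathtub` is hypothetical, not something from the book.

```python
def simulate_bathtub(stock, inflow, outflow, minutes):
    """Return the water level (litres) at the start and after each minute.

    Assumes inflow and outflow are constant (litres per minute) and that
    the tub can never hold less than zero litres.
    """
    levels = [stock]
    for _ in range(minutes):
        # A stock changes only through its flows: add inflow, subtract outflow.
        stock = max(0.0, stock + inflow - outflow)
        levels.append(stock)
    return levels


# Hypothetical tub: 50 L of water, tap adds 10 L/min, drain removes 5 L/min.
print(simulate_bathtub(50.0, 10.0, 5.0, 4))  # [50.0, 55.0, 60.0, 65.0, 70.0]
```

The same loop describes the oil and population examples; only the names of the stock and its flows change. Note that even though the tap is fully open from the start, the level rises only a few litres per step.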

Properties of Stocks and Flows

Stocks don't change instantly. Consider the time it takes to fill or empty a bathtub. Turning the tap is quick, but filling the tub takes longer.

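Why? Because a stock changes only through its flows, and flows take time to accumulate. Even a large, sudden change in a flow alters the stock only gradually. That is why stocks act as buffers: they absorb shocks and provide stability to the system.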

From our perspective, this stability is a mixed blessing. On the one hand, stocks offer security: they allow inflows and outflows to be out of balance for a while.

- Your bank account is a stock of money that gives you financial security. Lose your job, and the money stops coming in, but you can still use your savings while you find a solution.

On the flip side, the slow change of stocks means you can’t expect immediate transformation.

- For instance, if jobs become obsolete due to new technology, retraining the workforce won’t happen right away. It takes time for new information and skills to spread through the system.

Where We Focus

When we observe systems, we usually pay more attention to stocks than to flows, and when we do look at flows, we focus more on what comes into the system than on what goes out.

- For example, we often consider the rise in global population to be due to more births. We less frequently consider that improving healthcare, which reduces deaths, also contributes to population growth.

- Similarly, a business aiming to grow its workforce might look to hire more people without giving as much thought to reducing staff turnover through fewer resignations or layoffs.

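Because a stock can be raised either by increasing its inflow or by decreasing its outflow, focusing mainly on inflows means overlooking half of the available levers. This habit leaves us with a shallow understanding of how to influence systems effectively.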
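A quick bit of arithmetic shows why the outflow side matters. In this hypothetical sketch, a company's headcount is a stock with hires as the inflow and departures as the outflow; the figures are invented for illustration.

```python
def headcount_after(start, hires_per_month, departures_per_month, months):
    """Headcount after a number of months, assuming constant monthly flows."""
    net_flow = hires_per_month - departures_per_month  # inflow minus outflow
    return start + net_flow * months


baseline = headcount_after(100, 10, 8, 12)       # 124
more_hiring = headcount_after(100, 13, 8, 12)    # 160: push the inflow up
less_turnover = headcount_after(100, 10, 5, 12)  # 160: pull the outflow down
print(baseline, more_hiring, less_turnover)      # 124 160 160
```

Hiring three more people a month and losing three fewer have exactly the same effect on the stock, yet the second lever is the one we tend to overlook.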

Feedback Loops

Systems often display behaviors that stay consistent over time. Sometimes, they seem to correct themselves, keeping everything balanced. Other times, they either grow rapidly or shrink just as fast.

Such persistent patterns are usually driven by feedback loops. A feedback loop forms when a change in a stock affects the flows into or out of that same stock.

Balancing Feedback Loops (Stabilizing)

These are also called negative feedback loops or self-regulation mechanisms.

Balancing feedback loops keep a stock at a certain preferred level. If the stock strays from this level, the flows adjust to bring it back.

- If the stock falls too low, the system increases inflows or reduces outflows to raise the stock.

- If the stock goes too high, the system reduces inflows or increases outflows to lower it.

An easy way to understand this is by thinking of maintaining the water level in a bathtub.

- If the water is too low, you plug the drain and turn on the taps.

- If it's too high and spilling over, you unplug the drain and turn off the taps.
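A balancing loop can be sketched as goal-seeking: each step, the flow is set in proportion to the gap between the stock and its goal. This minimal Python version assumes that proportional rule and a hypothetical `adjustment_rate`; real systems may correct themselves in other ways.

```python
def balance_to_goal(stock, goal, adjustment_rate, steps):
    """Simulate a balancing (goal-seeking) feedback loop.

    Each step the net flow closes a fixed fraction of the gap between the
    stock and its goal (an illustrative assumption).
    """
    history = [stock]
    for _ in range(steps):
        gap = goal - stock  # positive if the stock is too low, negative if too high
        stock += adjustment_rate * gap  # the flow pushes the stock toward the goal
        history.append(stock)
    return history


print(balance_to_goal(0.0, 100.0, 0.5, 4))    # [0.0, 50.0, 75.0, 87.5, 93.75]
print(balance_to_goal(140.0, 100.0, 0.5, 2))  # [140.0, 120.0, 110.0]
```

Whether the stock starts too low or too high, it moves toward the goal, and the corrections shrink as the gap closes.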

Reinforcing Feedback Loops (Runaway)

These are also called positive feedback loops. They produce growth or decline that feeds on itself, like a snowball rolling downhill or savings earning compound interest.

Reinforcing feedback loops do the opposite of balancing ones: instead of correcting a change in the stock, they amplify it, so the stock rises or falls ever faster.

- If a stock goes up, the inflows also go up (or outflows go down), leading to even more increase.

- Conversely, if a stock goes down, the inflows decrease (or outflows increase), leading to a quicker decrease.

Examples of reinforcing loops that drive growth:

- The larger a population, the more babies are born each year, which makes the population larger still.

- A healthy economy can grow this way too. More factories and workers mean more production, which means more can be invested in building even more factories and training people.

Examples of reinforcing loops that drive decline:

- In farming, plants' roots hold the soil together. As erosion worsens, fewer plants can grow, so fewer roots hold the soil, which leads to even more erosion.

- During emergencies, when a store starts running low on items like toilet paper, shoppers rush to buy before it runs out. The less there is in stock, the more people want to buy it, which depletes the stock even faster.
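Both kinds of runaway behavior can be sketched in a few lines. This hypothetical Python model assumes that in the growth loop the inflow is proportional to the stock (as with births or compound interest), and that in the decline loop the outflow grows with how much of the stock is already gone (as with panic buying from a shelf that holds at most `capacity` items). The rates are illustrative, not measured.

```python
def reinforcing_growth(stock, rate, steps):
    """Growth loop: the inflow is proportional to the stock itself."""
    history = [stock]
    for _ in range(steps):
        stock += rate * stock  # a bigger stock means a bigger inflow
        history.append(stock)
    return history


def reinforcing_decline(stock, capacity, panic, steps):
    """Decline loop: the emptier the shelf, the faster people buy.

    Toy assumption: buying is proportional to the missing stock, so this
    model ignores ordinary demand when the shelf is full.
    """
    history = [stock]
    for _ in range(steps):
        outflow = panic * (capacity - stock)  # more missing -> more panic buying
        stock = max(0.0, stock - outflow)
        history.append(stock)
    return history


print(reinforcing_growth(100.0, 0.5, 3))         # [100.0, 150.0, 225.0, 337.5]
print(reinforcing_decline(90.0, 100.0, 0.5, 3))  # [90.0, 85.0, 77.5, 66.25]
```

In both cases each step's change is bigger than the last: +50, +75, +112.5 for growth and -5, -7.5, -11.25 for decline. That widening is the signature of a reinforcing loop.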

Building More Complex Systems

The simple concepts of stocks, flows, and feedback loops can be combined to form more complex systems that mirror the intricacies of the real world. Here, we'll discuss a basic example to illustrate how analyzing systems can help us understand how they behave.

One Stock with Competing Loops

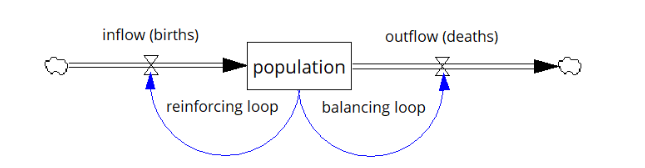

Let's consider a system with a single stock and two competing feedback loops—one reinforcing and one balancing.

We'll use the global population as our example:

- The population itself is the stock.

- Births form the reinforcing loop: as the population increases, so does the number of births, which can lead to exponential growth.

- Deaths form the balancing loop: as the population increases, so does the number of deaths, which can counteract growth.

The diagram at the top of this section represents this stock and its two flows.

The behavior of the population in this model depends on the relative strengths of the birth and death loops:

- If more people are born than die, the population will grow, which is the current global trend.

- If more people die than are born, the population will decrease, which could happen if birth rates drop dramatically or death rates increase significantly.

- If births and deaths balance out, the population will stabilize.
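Here is a minimal sketch of this one-stock, two-loop model in Python. It assumes constant birth and death rates (fractions of the population per year), with both flows proportional to the stock; the rates below are hypothetical, not real demographic data.

```python
def simulate_population(population, birth_rate, death_rate, years):
    """One stock (population) with two competing loops.

    births = birth_rate * population  -> reinforcing loop
    deaths = death_rate * population  -> balancing loop
    """
    for _ in range(years):
        births = birth_rate * population
        deaths = death_rate * population
        population += births - deaths
    return population


print(round(simulate_population(1000.0, 0.03, 0.01, 10)))  # 1219: births dominate
print(round(simulate_population(1000.0, 0.01, 0.03, 10)))  # 817: deaths dominate
print(simulate_population(1000.0, 0.02, 0.02, 10))         # 1000.0: loops balance
```

Only the relative strength of the two loops decides the outcome, which is why any factor that shifts either rate changes the population's trajectory.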

Various factors can influence these loops:

- Wealthier nations tend to have lower birth rates, so as countries develop economically, birth rates might decrease.

- Deadly diseases can cause death rates to soar, as seen during the HIV/AIDS crisis.

- Social choices or health issues could also reduce the birth rate.

More Complex Systems

If you're interested in delving into more intricate systems and their behaviors, the full book covers:

- A system with one stock and two competing balancing loops, such as a thermostat regulating room temperature.

- How delays in a balancing loop cause oscillations, such as a car sales manager trying to keep inventory at a steady level.

- A model of extracting a nonrenewable resource, such as oil.

- A model of harvesting a renewable resource, such as fish populations.

Why Systems Work Well

Systems can achieve their goals impressively, enduring over time and adapting to changes in their surroundings. Their success comes down to three characteristics:

- Resilience: This is the system's capacity to recover from challenges.

- Self-organization: This is the system's ability to make its own structure more complex and more capable over time.

- Hierarchy: This is how a system is organized into different levels of smaller systems within larger ones.

Ignoring these qualities can make systems fragile and unable to cope with change.

Resilience

Think of resilience as how well a system can keep functioning across different situations. The more situations it can handle, the more resilient it is. Take the human body as an example: it can fight off illnesses, heal wounds, and survive in various climates and diets.

Resilience is supported by feedback loops at various levels:

- Basic feedback loops help the system maintain itself. To boost resilience, a system might have several loops ready to step in for each other, working in different ways and times.

- There are higher-level loops that fix or improve the basic ones.

- And there are even higher-level loops that enhance those higher-level loops.

Often, we design systems to prioritize efficiency over resilience, removing what we see as unnecessary feedback loops. This can make a system fragile, so that even small disturbances cause big problems.

Self-Organization

Self-organization is a system's ability to increase its own complexity, allowing it to evolve and improve. The evolution of life on Earth is a perfect example, from simple chemicals to complex organisms like humans.

Some organizations limit self-organization, maybe because they want uniformity for better performance, or they fear instability. This can lead to workplaces where employees are just cogs in a machine, not encouraged to think or disagree.

Stifling self-organization can reduce a system's resilience and its capacity to adapt to new challenges.

Hierarchy

A hierarchical system is one where smaller systems are nested within bigger ones. For instance:

- Cells are parts of organs.

- Organs are parts of your body.

- And you are part of larger systems like your family and community.

In a well-functioning hierarchy, the smaller parts mostly look after themselves while contributing to the bigger system. The bigger system's job is to coordinate the parts so they function better together.

Organizing into a hierarchy can make a system more efficient since each part can manage its own affairs without needing too much direction from the top.

Problems can arise at both the subsystem level and the larger-system level:

- If the subsystem optimizes for itself and neglects the larger system, the whole system can fail. For example, a single cell in a body can turn cancerous, optimizing for its own growth at the expense of the larger human system.

- The larger system’s role is to help the subsystems work better, and to coordinate work between them. If the larger system exerts too much control, it can suppress self-organization and efficiency.

How We Fail in Systems

We study systems to predict and influence their outcomes, but often systems behave in unexpected ways.

The problem lies in our desire for simplicity and our limited ability to grasp complexity. We prefer straightforward cause-and-effect reasoning and short-term thinking, which blinds us to the full consequences of our actions.

These limitations hinder our ability to see the true nature of things. They prevent us from creating well-functioning systems and from making effective changes within them.

Systems with similar structures tend to fall into similar problems, which Meadows calls system traps. Here are two examples; the book covers more in detail.

Escalation

Also known as: Keeping up with the Joneses, arms race

Imagine competitors each with their own pile of something they value. They all want the biggest pile. If one falls behind, they strive to outdo the others.

This creates a reinforcing loop—each increase in one pile drives the others to increase theirs, potentially leading to excessive costs and waste until someone gives up or collapses.

During the Cold War, for example, the Soviet Union and the United States kept increasing their weapon stockpiles, spending trillions. Or consider how companies often escalate advertising to outshine their competition.
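The escalation loop can be sketched with two competitors who each aim to stay a fixed margin ahead of the other. In this hypothetical Python model, `margin` is an assumed fraction (0.25 means "25% more than them") and the two sides take turns reacting.

```python
def escalate(a, b, margin, rounds):
    """Two competitors take turns topping each other's stock by `margin`."""
    history = [(a, b)]
    for _ in range(rounds):
        a = b * (1 + margin)  # A reacts to B's current stock
        b = a * (1 + margin)  # B reacts to A's new stock
        history.append((a, b))
    return history


for a, b in escalate(100.0, 100.0, 0.25, 2):
    print(a, b)
# 100.0 100.0
# 125.0 156.25
# 195.3125 244.140625
```

Neither side ever feels safely ahead, so both stocks grow by a constant factor every round. A smaller margin slows the spiral, and if one side stops reacting, the other side's target stops rising too.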

Fixing Escalation

The key is to weaken the feedback loop where competitors react to each other.

One method is to agree mutually to stop the competition. Even with potential mistrust, a successful agreement can end the escalation and reintroduce balancing feedback that prevents uncontrolled growth.

If negotiation is not an option, it might be best to opt out of the competition. Because the other parties are reacting to your actions, deliberately keeping your stock lower may satisfy them and halt their escalation. This approach does require you to withstand the advantage their larger stock gives them.

Addiction

Also known as: dependence, shifting the burden to the intervenor

In a system, sometimes a participant faces a challenge. Normally, they would have to resolve this issue on their own. But occasionally, an outside helper steps in to provide assistance.

This help is not inherently negative, but in cases of addiction, the helper's assistance actually reduces the participant's own ability to solve the problem. This could be because the help only addresses the symptoms and not the root cause, or it might prevent the development of the participant's own capabilities.

Initially, the problem might seem to be resolved, but it usually resurfaces, often worse than before because the participant's problem-solving ability has diminished. The helper must then provide even more assistance, starting a reinforcing feedback loop where the participant becomes increasingly dependent on the help.

A real-world instance is the shift in elder care in Western societies. Families used to care for their elderly, but with the introduction of nursing homes and social security, they began to rely on these services, losing the skills and inclination to provide care themselves.
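The shifting-the-burden loop can be sketched as follows. In this hypothetical Python model, a system faces a fixed need each period, the intervenor covers whatever the system's own capacity can't, and every unit of help erodes that capacity by an assumed `erosion` fraction. The numbers are illustrative only.

```python
def shift_the_burden(own_capacity, need, erosion, periods):
    """Return how much outside help is needed in each period.

    Assumption: each unit of help given erodes the system's own capacity
    by `erosion` units, because the skill goes unused.
    """
    help_given = []
    for _ in range(periods):
        help_now = max(0.0, need - own_capacity)  # intervenor fills the gap
        help_given.append(help_now)
        own_capacity = max(0.0, own_capacity - erosion * help_now)  # capacity fades
    return help_given


print(shift_the_burden(80.0, 100.0, 0.5, 4))  # [20.0, 30.0, 45.0, 67.5]
print(shift_the_burden(80.0, 100.0, 0.0, 4))  # [20.0, 20.0, 20.0, 20.0]
```

When help doesn't erode capacity (an `erosion` of zero), the need for help stays flat; when it does, the help required grows every period, which is the reinforcing dependence described above.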

Fixing Addiction

To address this when intervening in a system:

- Aim to understand the underlying cause of the system's issue.

- Craft an intervention that tackles the root problem without impairing the system's own problem-solving capacity.

- Plan to phase out your involvement in the system once you've intervened, allowing it to operate independently.

More Systems Problems

If you're interested in learning about more systems problems, the book includes a discussion of:

- Policy resistance: When policies fail to change a system because actors within it pull it toward their own, conflicting goals. An example is the ongoing war on drugs.

- The rich get richer: This refers to situations where the successful party gains more resources and continually outpaces the competition, like in monopolistic markets.

- Drift to low performance: When standards are based on past performance rather than on absolute measures, each slip lowers the standard, which permits further slips and leads to a continuous decline. For instance, a company losing market share might excuse it by noting that it is doing only slightly worse than last year.
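Drift to low performance can be sketched by letting the standard reset to whatever was actually achieved last time. This hypothetical Python model assumes performance always falls a fixed `shortfall` below the current standard, and compares a drifting standard with a fixed, absolute one.

```python
def simulate_performance(standard, shortfall, periods, drifting=True):
    """Return actual performance in each period.

    drifting=True:  the standard is reset to last period's performance.
    drifting=False: the standard stays fixed (an absolute measure).
    """
    history = []
    for _ in range(periods):
        performance = standard - shortfall  # always falls a little short
        if drifting:
            standard = performance  # "about as good as last time" becomes the goal
        history.append(performance)
    return history


print(simulate_performance(100.0, 2.0, 4))                  # [98.0, 96.0, 94.0, 92.0]
print(simulate_performance(100.0, 2.0, 4, drifting=False))  # [98.0, 98.0, 98.0, 98.0]
```

With an absolute standard, the same shortfall stays a constant gap; with a drifting standard, every slip becomes the new baseline and performance erodes steadily.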

Improving as a Systems Thinker

Learning to think in systems is a lifelong process. The world is so endlessly complex that there is always something new to learn. Just when you think you have a good handle on a system, it behaves in ways that surprise you and force you to revise your model.

And even if you understand a system well and believe you know what should be changed, actually implementing the change is a whole other challenge.

Here’s guidance on how to become a better systems thinker:

- To understand a system, first watch how it behaves. Research its history: how did this system get here? Get data: chart important metrics over time, and tease out their relationships with each other.

- Expand your boundaries. Think in both short and long timespans—how will the system behave 10 generations from now? Think across disciplines—to understand complex systems, you’ll need to understand fields as wide as psychology, economics, religion, and biology.

- Articulate your model. As you understand a system, put pen to paper and draw a system diagram. Put into place the system elements and show how they interconnect. Drawing your system diagram makes explicit your assumptions about the system and how it works.

- Expose this model to other knowledgeable people and invite their feedback. They will question your assumptions and push you to improve your understanding. You will have to admit your mistakes and redraw your model, which trains your mental flexibility.

- Decide where to intervene. Most interventions fixate on tweaking mere numbers in the system structure (such as department budgets and national interest rates). There are much higher-leverage points to intervene, such as weakening the effect of reinforcing feedback loops, improving the system’s capacity for self-organization, or resetting the system’s goals.

- Probe your intervention to its deepest human layers. When investigating why interventions don’t work, you may run into deep questions of human existence. You might blame people in the system for being blind to obvious data, convinced that if only they saw things as you do, the problem would be fixed instantly. But this raises deeper questions: How does anyone process the data they receive? How do people view the same data through very different cognitive filters?
